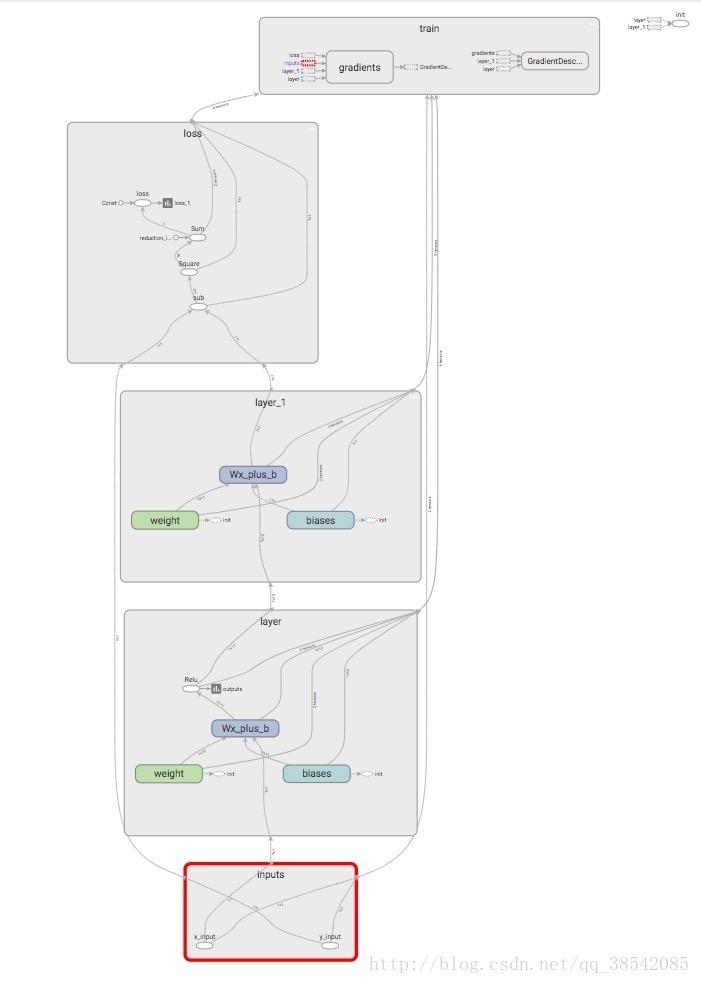

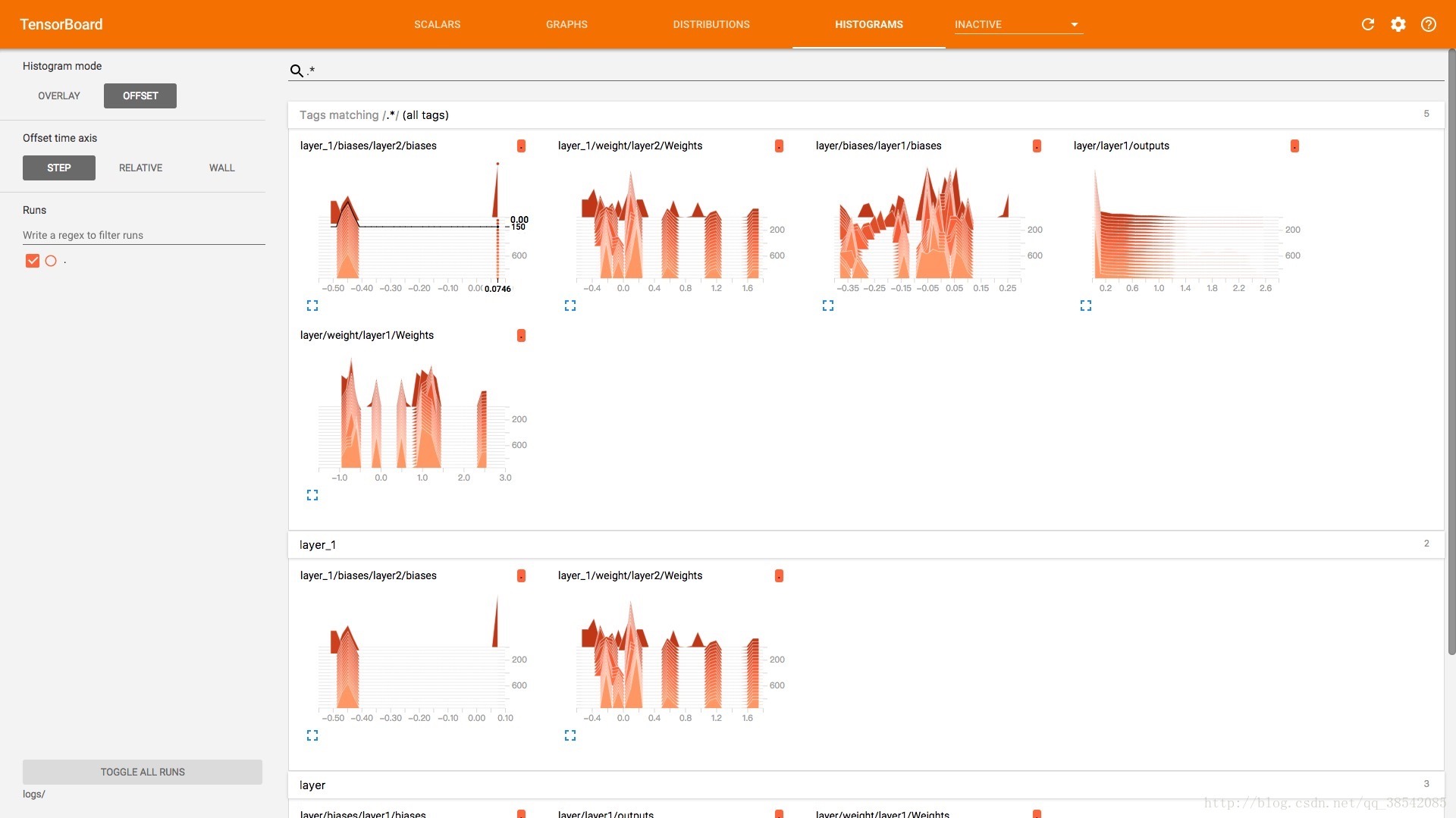

| import tensorflow as tf

import numpy as np

def add_layer(inputs, in_size, out_size, n_layer, activation_function=None):

layer_name = 'layer%s' % n_layer

with tf.name_scope("layer"):

with tf.name_scope("weight"):

Weights = tf.Variable(tf.random_normal([in_size,out_size]),name="W")

tf.summary.histogram(layer_name+'/Weights',Weights)

with tf.name_scope("biases"):

biases = tf.Variable(tf.zeros([1, out_size])+0.1,name="b")

tf.summary.histogram(layer_name+'/biases',biases)

with tf.name_scope("Wx_plus_b"):

Wx_plus_b = tf.matmul(inputs, Weights)+biases

if activation_function == None:

outputs = Wx_plus_b

else:

outputs = activation_function(Wx_plus_b)

tf.summary.histogram(layer_name+'/outputs',outputs)

return outputs

x_data = np.linspace(-1,1,300)[:,np.newaxis]

noise = np.random.normal(0,0.05,x_data.shape)

y_data = np.square(x_data) - 0.5 + noise

with tf.name_scope("inputs"):

xs = tf.placeholder(tf.float32,[None,1],name="x_input")

ys = tf.placeholder(tf.float32,[None,1],name="y_input")

l1 = add_layer(xs, 1, 10, n_layer=1, activation_function=tf.nn.relu)

prediction = add_layer(l1, 10, 1, n_layer=2, activation_function=None)

with tf.name_scope("loss"):

loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys-prediction),reduction_indices=[1]),name="loss")

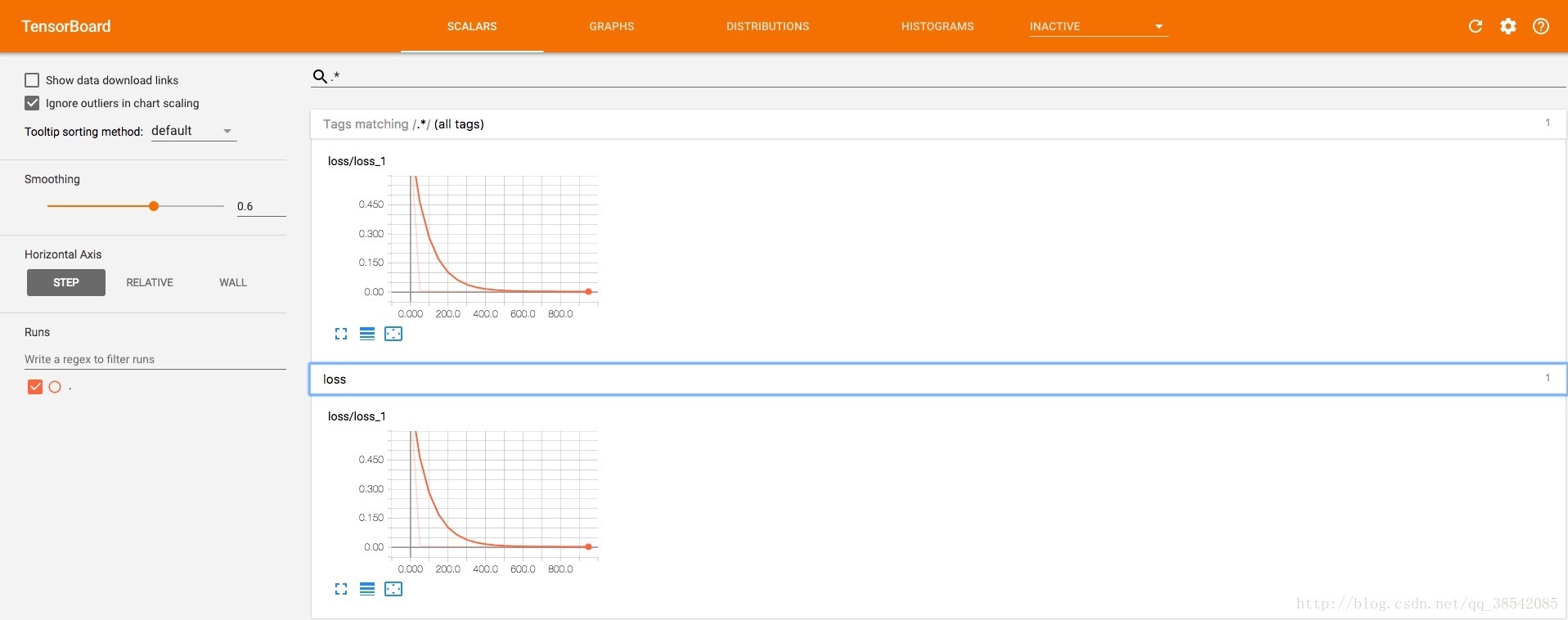

tf.summary.scalar('loss',loss)

with tf.name_scope("train"):

train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()

sess = tf.Session()

merged = tf.summary.merge_all()

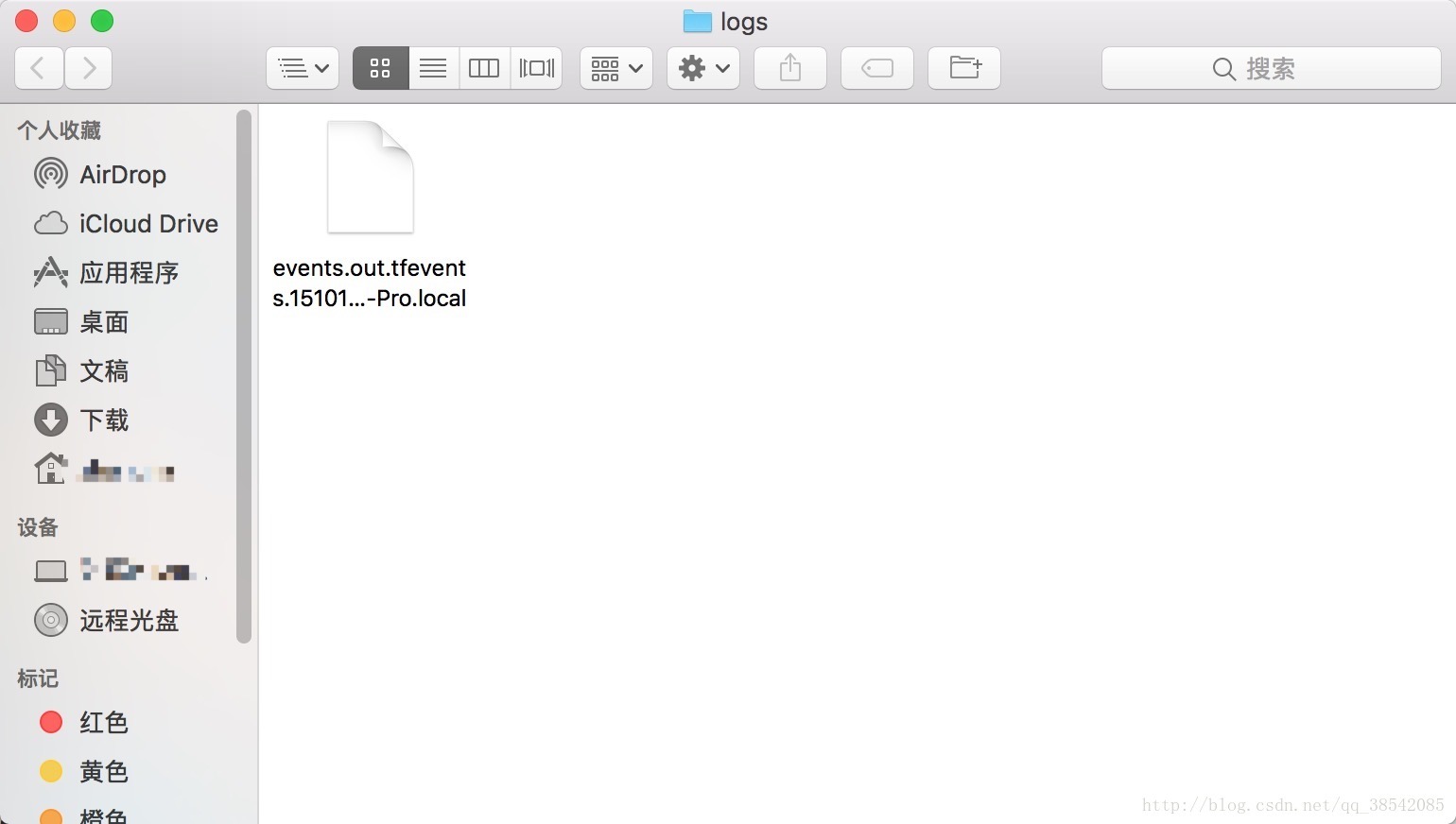

writer = tf.summary.FileWriter("logs/",sess.graph)

sess.run(init)

for i in range(1000):

sess.run(train_step,feed_dict={xs:x_data,ys:y_data})

if i%50==0:

result = sess.run(merged,feed_dict={xs:x_data,ys:y_data})

writer.add_summary(result,i)

|
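To make the arithmetic inside `add_layer` and the loss concrete, here is a minimal sketch in plain Python (no TensorFlow) of what one dense layer and the mean-of-row-sums squared error compute. The helper names `relu`, `dense_forward`, and `mse_loss` are hypothetical and not part of the listing; the weights are hand-picked for illustration rather than drawn from `tf.random_normal`, and no training is performed.

```python
def relu(row):
    """Element-wise max(0, v), the same nonlinearity as tf.nn.relu."""
    return [max(0.0, v) for v in row]


def dense_forward(inputs, weights, biases, activation=None):
    """Compute inputs @ weights + biases row by row, then apply activation.

    inputs:  list of rows, shape [batch, in_size]
    weights: list of rows, shape [in_size, out_size]
    biases:  flat list of length out_size, shared by every row in the batch
    """
    outputs = []
    for row in inputs:
        out = [
            sum(x * weights[i][j] for i, x in enumerate(row)) + biases[j]
            for j in range(len(biases))
        ]
        outputs.append(activation(out) if activation is not None else out)
    return outputs


def mse_loss(targets, predictions):
    """Mean over the batch of the per-row sum of squared errors."""
    row_sums = [
        sum((t - p) ** 2 for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(targets, predictions)
    ]
    return sum(row_sums) / len(row_sums)


# Hand-picked illustrative values: 2 samples, 1 input feature, 2 hidden units.
xs = [[1.0], [-2.0]]
hidden = dense_forward(xs, weights=[[1.0, -1.0]], biases=[0.5, 0.5],
                       activation=relu)
prediction = dense_forward(hidden, weights=[[1.0], [1.0]], biases=[0.0])
loss = mse_loss([[1.0], [2.0]], prediction)
```

With these values each hidden unit is active for only one of the two samples, which is the piecewise-linear behavior the ReLU hidden layer relies on to approximate the quadratic target.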